```
ometa BinaryParser <: Parser {
  // Portable Network Graphics (PNG) Specification (Second Edition)
  // http://www.w3.org/TR/PNG/
  // Note: not all chunk types are defined; this is just a proof of concept (POC)

  // entire PNG stream
  START = [header:h (chunk+):c number*:n] -> [h, c, n],

  // chunk definition
  chunk = int4:len str4:t apply(t, len):d byte4:crc
    -> [#chunk, [#type, t], [#length, len], [#data, d], [#crc, crc]],

  // chunk types
  IHDR :len = int4:w int4:h byte:dep byte:type byte:comp byte:filter byte:inter
    -> {type: "IHDR", data: {width: w, height: h, bitdepth: dep, colortype: type,
                             compression: comp, filter: filter, interlace: inter}},
  gAMA :len = int4:g -> {type: "gAMA", value: g},
  pHYs :len = int4:x int4:y byte:u -> {type: "pHYs", x: x, y: y, units: u},
  tEXt :len = repeat('byte', len):d -> {type: "tEXt", data: toAscii(d)},
  iTXt :len = repeat('byte', len):d -> {type: "iTXt", data: toShortAscii(d)},
  tIME :len = int2:y byte:mo byte:day byte:hr byte:min byte:sec
    -> {type: "tIME", year: y, month: mo, day: day, hour: hr, minute: min, second: sec},
  IDAT :len = repeat('byte', len):d -> {type: "IDAT", data: "omitted"},
  IEND :len = repeat('byte', len):d -> {type: "IEND"},

  // useful definitions
  byte   = number,
  header = 137 80 78 71 13 10 26 10 -> "PNG HEADER",                      // mandatory 8-byte PNG signature
  int2   = byte:a byte:b -> byteArrayToInt16([b, a]),                      // 2 big-endian bytes to a 16-bit integer
  int4   = byte:a byte:b byte:c byte:d -> byteArrayToInt32([d, c, b, a]),  // 4 big-endian bytes to a 32-bit integer
  str4   = byte:a byte:b byte:c byte:d -> toChunkType([a, b, c, d]),       // 4-byte chunk type name
  byte4  = repeat('byte', 4):d -> d,

  END
}
```
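For comparison, the stream layout that the `START` and `chunk` rules describe (the 8-byte signature, then chunks made of a 4-byte big-endian length, a 4-byte ASCII type, `length` data bytes and a 4-byte CRC) can also be walked with a plain loop. This is a minimal sketch, not part of the parser: it assumes the whole file is already in a `Uint8Array`, `walkPngChunks` and `readUint32BE` are hypothetical helper names, and, like the grammar, it reads the CRC without verifying it.

```javascript
// PNG signature bytes, the same values the grammar's `header` rule matches
const PNG_SIGNATURE = [137, 80, 78, 71, 13, 10, 26, 10];

// Read a 4-byte big-endian unsigned integer starting at `offset`
function readUint32BE(bytes, offset) {
  return ((bytes[offset] << 24) | (bytes[offset + 1] << 16) |
          (bytes[offset + 2] << 8) | bytes[offset + 3]) >>> 0; // >>> 0 keeps it unsigned
}

// Hypothetical helper: split a PNG byte stream into {type, length, data, crc} records.
// The CRC is read but not checked; any bytes after IEND are ignored.
function walkPngChunks(bytes) {
  for (let i = 0; i < PNG_SIGNATURE.length; i++) {
    if (bytes[i] !== PNG_SIGNATURE[i]) throw new Error("not a PNG stream");
  }
  const chunks = [];
  let pos = PNG_SIGNATURE.length;
  while (pos + 12 <= bytes.length) {            // 12 = length + type + CRC fields
    const length = readUint32BE(bytes, pos);
    const type = String.fromCharCode(...bytes.subarray(pos + 4, pos + 8));
    const dataStart = pos + 8;
    const dataEnd = dataStart + length;
    if (dataEnd + 4 > bytes.length) throw new Error("truncated chunk " + type);
    const data = bytes.subarray(dataStart, dataEnd); // a view, not a copy
    const crc = readUint32BE(bytes, dataEnd);
    chunks.push({ type, length, data, crc });
    pos = dataEnd + 4;
    if (type === "IEND") break;                  // IEND is the last chunk in a PNG stream
  }
  return chunks;
}
```

Because `subarray` returns views rather than copies, each chunk's `data` shares memory with the original buffer, so large `IDAT` payloads are not duplicated.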

```javascript
BinaryParser.repeat = function(rule, count) {
  // Apply `rule` exactly `count` times and collect the results
  var ret = [];
  for (var i = 0; i < count; i++) {
    ret.push(this._apply(rule));
  }
  return ret;
};

toAscii = function(byteArray) {
  var text = String.fromCharCode.apply(String, byteArray);
  console.log("String: " + text + " (...byte array omitted...)");
  return text;
};

toShortAscii = function(byteArray) {
  var embeddedText = String.fromCharCode.apply(String, byteArray);
  // The iTXt chunk can contain a lot of text/XML, so only log the first 50 characters for this proof of concept
  console.log("String: " + embeddedText.slice(0, 50) + " (...only first 50 bytes shown...)");
  return embeddedText;
};

toChunkType = function(byteArray) {
  var aChunkType = String.fromCharCode.apply(String, byteArray);
  console.log("ChunkType: " + aChunkType + " : " + byteArray);
  return aChunkType;
};

// int4 passes the bytes least-significant first ([d, c, b, a]), so combine them
// explicitly instead of reading a Uint32Array, whose byte order depends on the platform
byteArrayToInt32 = function(localByteArray) {
  var b = localByteArray;
  var newInt32 = ((b[3] << 24) | (b[2] << 16) | (b[1] << 8) | b[0]) >>> 0; // >>> 0 keeps the result unsigned
  console.log("i32 : " + newInt32 + " <= " + localByteArray);
  return newInt32;
};

// int2 also passes the bytes least-significant first ([b, a])
byteArrayToInt16 = function(byteArray) {
  var newInt16 = (byteArray[1] << 8) | byteArray[0];
  console.log("i16 : " + newInt16 + " <= " + byteArray);
  return newInt16;
};

fetchBinary = function() {
  var req = new XMLHttpRequest();
  req.open("GET", "http://sushihangover.azurewebsites.net/Content/Static/IronyLogoSmall.png", true);
  req.responseType = "arraybuffer";
  req.onload = function(e) {
    console.log("loaded");
    var buf = req.response;
    if (buf) {
      var byteArray = new Uint8Array(buf);
      console.log("got " + byteArray.byteLength + " bytes");
      // Copy the typed array into a plain array for the OMeta parser
      var arr = [];
      for (var i = 0; i < byteArray.byteLength; i++) {
        arr.push(byteArray[i]);
      }
      // Watch out if you uncomment the next line, it can kill your browser with large PNG files
      // console.log(arr);
      var parserResults = BinaryParser.match(arr, "START");
      console.log(parserResults);
    }
  };
  req.send(null);
};

fetchBinary();
```
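PNG stores every multi-byte integer in big-endian (network) byte order; the grammar's `int2` and `int4` rules handle this by reversing the bytes before calling the helper functions. An alternative is `DataView`, whose `getUint16` and `getUint32` read big-endian unless `littleEndian` is passed as `true`. Below is a minimal sketch that decodes the `IHDR` and `tIME` payloads that way, producing the same field names as the grammar; `decodeIHDR` and `decodeTIME` are hypothetical names, and the input is assumed to be the raw chunk data as a `Uint8Array`.

```javascript
// Hypothetical helper: decode the 13-byte IHDR payload
function decodeIHDR(data) {
  if (data.length !== 13) throw new Error("IHDR data must be 13 bytes");
  // Respect byteOffset so this also works on a subarray of a larger buffer
  const view = new DataView(data.buffer, data.byteOffset, data.byteLength);
  return {
    width: view.getUint32(0),     // DataView reads big-endian by default
    height: view.getUint32(4),
    bitdepth: view.getUint8(8),
    colortype: view.getUint8(9),
    compression: view.getUint8(10),
    filter: view.getUint8(11),
    interlace: view.getUint8(12),
  };
}

// Hypothetical helper: decode the 7-byte tIME payload
// (2-byte year, then month, day, hour, minute, second)
function decodeTIME(data) {
  if (data.length !== 7) throw new Error("tIME data must be 7 bytes");
  const view = new DataView(data.buffer, data.byteOffset, data.byteLength);
  return {
    year: view.getUint16(0),
    month: view.getUint8(2),
    day: view.getUint8(3),
    hour: view.getUint8(4),
    minute: view.getUint8(5),
    second: view.getUint8(6),
  };
}
```

For example, a 13-byte `IHDR` payload beginning `0 0 1 0 0 0 0 64` decodes to a width of 256 and a height of 64, with no manual byte reversal.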

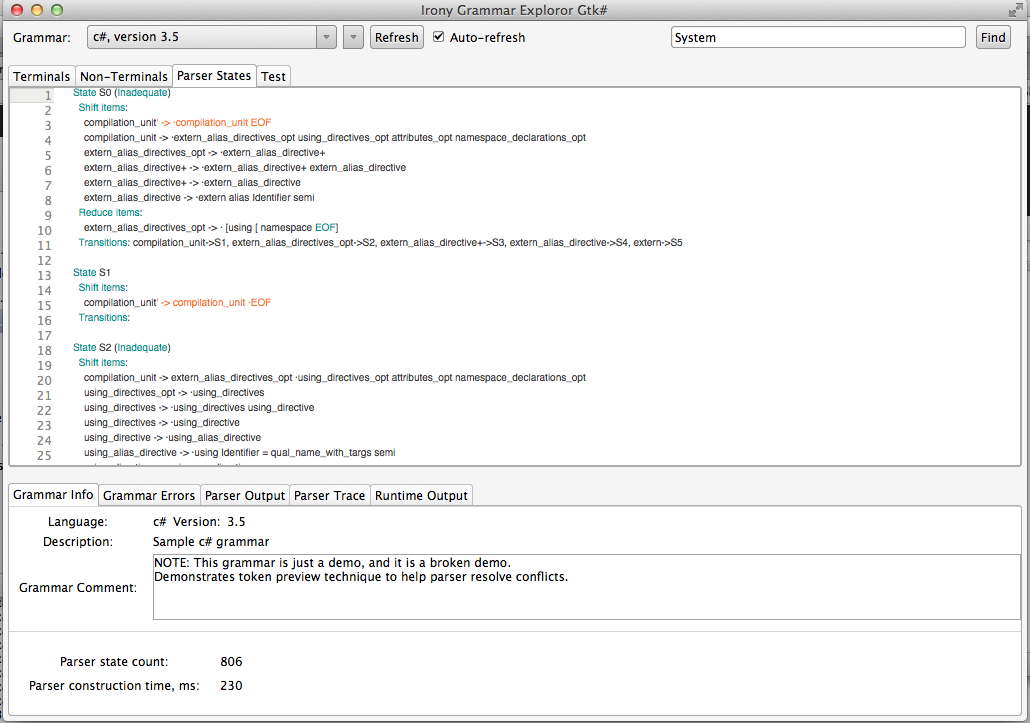

I was working on an Irony/C#-based DSL that I wrote a while back and noticed some strange namespace issues with the GTK UI (exposed only within Xamarin's Stetic designer; I'm not sure how those naming conflicts were not a compile-time error…).


With the removal of PlayScript's public repo from GitHub, I am assuming the project is becoming a commercial offering from either Xamarin or Zynga, and that future releases will come with a license change?
